Antalya University

Course Name: Introduction to Data Science Fall 2022

Course Code: CS 447

Language of Course: English

Credit: 3

Course Coordinator / Instructor: Şadi Evren ŞEKER

Contact: intrds@sadievrenseker.com

Schedule: Friday 11.00 – 13.00

Course Description: This is an introductory course in data science that focuses on machine learning, artificial intelligence and big data.

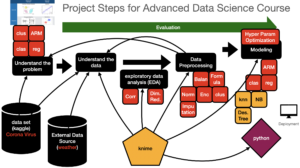

- The course takes a top-down approach to data science projects. The first step covers data science project management techniques, and we follow the CRISP-DM methodology through the steps below:

- Business Understanding: We cover the types of problems and business processes found in real life.

- Data Understanding: We cover data types and data problems. We also visualize the data to explore it.

- Data Preprocessing: We cover the classical data problems and how to handle them, including noisy or dirty data and missing values, row and column filtering, and data integration with concatenation and joins. We also cover data transformations such as discretization, normalization and pivoting.

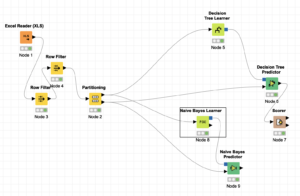

- Machine Learning: We cover classification algorithms such as Naive Bayes, Decision Trees, Logistic Regression and K-NN. We also cover prediction / regression algorithms such as linear regression, polynomial regression and decision tree regression, and unsupervised learning problems such as clustering and association rule learning, with k-means, hierarchical clustering and the A-Priori algorithm. Finally, we cover ensemble techniques in KNIME and Python on big data platforms.

- Evaluation: In the final step, we study the metrics of success: the confusion matrix, precision, recall, sensitivity and specificity for classification; purity and the Rand index for clustering; and RMSE, RMAE, MSE and MAE for regression / prediction problems, using KNIME and Python on big data platforms.

Course Objective and Learning Outcomes:

1. Understanding of real life cases about data

2. Understanding of real life data related problems

3. Understanding of data analysis methodologies

4. Understanding of some basic data operations such as preprocessing, transformation and manipulation

5. Understanding of new technologies such as big data, NoSQL and cloud computing

6. Ability to use popular software in the industry

7. Introduction to data related problems and their applications

Tools: List of course software:

- Excel

- KNIME

- Python programming with NumPy, pandas, scikit-learn (SKLearn), statsmodels or Dask

This course is hands-on in all of its steps, so attending with a laptop computer is necessary. The software listed above will be provided during the course, and the list is subject to updates.

Grading: One individual term project and one individual homework track covering all the topics of the course: 50%; homework submitted each week: 50%. Each homework is due one week after it is assigned, at the start of the next class (Friday 11.00 a.m.). Submissions after Friday 11.00 a.m. are considered late and will not be graded. Any violation of the code of honor will result in disciplinary action and failing the class.

Project Requirements: You are free to select a project topic. The only requirement is that you cover at least two topics from the following list by solving the same problem with two separate approaches from the list, and that you compare the findings of these two alternative solutions: KNN, SVM, XGBoost, LightGBM, CatBoost, Decision Trees, Random Forest, Linear Regression, Polynomial Regression, SVR, ARL (ARM), K-Means, DBSCAN, HC

Example project topics: you can search Kaggle for project ideas; you can also find good data sets on these websites.

Project proposal: until Apr 30. Please explain your project idea and the alternative solution approaches from the course content, together with your data set and the outcomes you plan to achieve. Send it by e-mail with the subject "project proposal". Your project is very important for the course, and possible problems might be related to the data set, the algorithms, the approaches or the purpose of your project. Late submissions and submissions with misinformation will not receive a reply, so submit at your own risk.

Project Deliverables: You are asked to submit the items below by e-mail until Dec 22, 2022.

- Presentation and Demo video: please record a video of your presentation and a demo of your project.

- Project Presentation: the slides you use during the presentation

- Project Report: a detailed explanation of your approaches, the difficulties you faced during the project implementation, a comparison of your two alternative approaches to the same problem (from the perspectives of implementation difficulty, success rate, running performance etc.), and some critical parts of your algorithms. Also provide details about how you increased the success of your approach. Please answer all of the following questions in your project report: If your problem has unbalanced data, what did you do to handle it? What did you do to handle missing values and dirty or noisy data? Did you use a dimension transformation such as PCA or LDA, and why? Did you check for underfitting or overfitting, and how did you get rid of it? Did you use any regularization? Did you implement segmentation / clustering before the classification or prediction steps, and why or why not? Which data science project management method did you use (e.g. SEMMA, CRISP-DM or KDD), and why did you pick this method? Which step was the most difficult, and why? How did you optimize the parameters of your algorithms? What were the best parameters, and why? How did you find these parameters, and do you think you can use the same parameters on other data sets for the same problem in the future?

- Running Code or Project: you are free to implement your solution on any platform / language. The only requirement is that you code the two alternative solutions on the same platform / programming language (otherwise the comparison would not be fair). Please also provide an installation manual for your platform and for running your code.

- Interview: A personal interview will be held after the submissions. Each of you will be asked to provide a time slot of at least 30 minutes for your project. During this time, you will connect via an online platform, show your running demo and answer questions. Please attach your available time slots to your submission.

Project Policies: Late submissions will not be accepted. A project that solves the problem with only one approach, and therefore cannot compare two approaches, will be graded out of a maximum of 35 points (out of 100). So please push yourselves to submit two separate approaches for your problem. You are free to use any library in your project, except in the AI part: there you are not allowed to use a library or any code from the internet or written by anybody else. In other words, you have to write the two different AI modules of your project yourself, with two different approaches from the course content, and using somebody else's code in the AI module will result in 0 as the final grade.

Course Content:

Week 1 (Sep 30): Introduction to Data, Problems and Real World Examples

Some useful information:

- DIKW Pyramid: DIKW pyramid – Wikipedia

- CRISP-DM: Cross-industry standard process for data mining – Wikipedia

Slides from the first week: week1

Install Anaconda from anaconda.org before the next class:

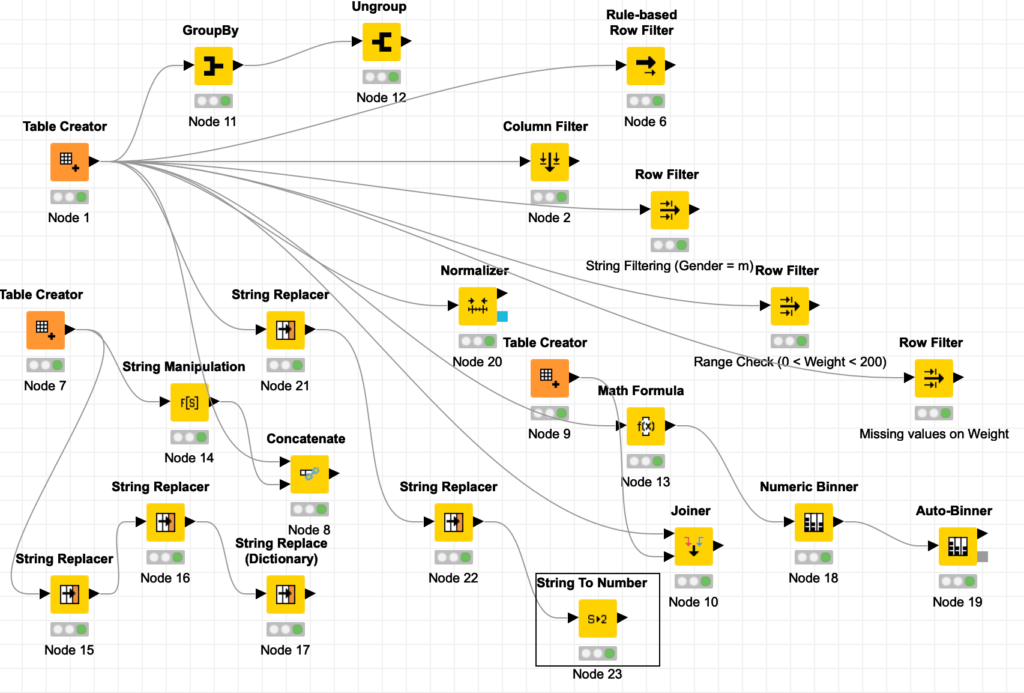

Week 2 (Oct 7): Introduction to Data Manipulation and Data Preparation: introduction to the Python, NumPy and pandas libraries, and some basic operations for:

- Row Filter and Concept of Missing Values

- Column Filter

- Advanced Filters

- Concatenate

- Join

- Group by , Aggregation

- Formulas, String Replace

- String Manipulation

- Discrete, Quantized Data, Binning

- Normalization

Code in Python:

Click here to download the code and data file.

Some useful links we referred to during the class:

- https://docs.python.org/3/tutorial/index.html

- https://pandas.pydata.org/docs/reference/index.html

- https://scikit-learn.org/stable/

- https://scikit-learn.org/stable/modules/generated/sklearn.tree.DecisionTreeClassifier.html

- https://scikit-learn.org/stable/modules/generated/sklearn.tree.plot_tree.html

- https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.MinMaxScaler.html

Useful resources from previous years:

- Introduction to Python Programming for Data Science and an end-to-end Python application for data science

- Brief review of Python programming

- Introduction to data manipulation libraries: NumPy and pandas

- Introduction to the scikit-learn library and a sample classification

You can install Anaconda and Spyder from the link below:

We also covered the topics below during the class:

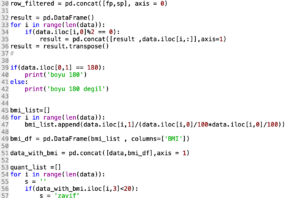

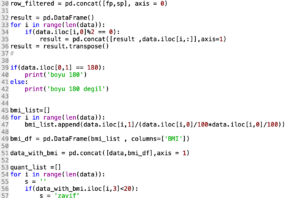

- Data loading from external source using Pandas library (with read_excel or read_csv methods)

- DataFrame slicing and dicing (using the iloc property and the lists provided to the iloc method)

- Column Filtering (with copying into a new data frame)

- Row Filtering (with copying into a new data frame)

- Advanced row filtering (like filtering the people with even-numbered heights)

- Column- or row-wise formulas (we calculated the BMI for everybody)

- Quantization (discretization or binning), where we applied condition-based binning

- Min-Max normalization (we used MinMaxScaler from the scikit-learn library)

- Group by operation (we used the groupby method from the pandas library)
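A minimal pandas / scikit-learn sketch of the operations listed above, assuming a small hypothetical height/weight table in place of the class data file (the class code loads its data with read_excel or read_csv). The BMI thresholds used for binning are the common WHO cut-offs, chosen here for illustration; the class may have used different conditions.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Hypothetical sample data (stand-in for pd.read_excel / pd.read_csv)
df = pd.DataFrame({
    "name": ["Ali", "Ayse", "Mehmet", "Zeynep", "Can"],
    "gender": ["m", "f", "m", "f", "m"],
    "height": [180, 165, 172, 158, 190],   # cm
    "weight": [80, 55, 70, 50, None],      # kg, one missing value
})

# Slicing and dicing with iloc: first three rows, columns 0 and 1
first_rows = df.iloc[0:3, [0, 1]]

# Column filter, copied into a new DataFrame
hw = df[["height", "weight"]].copy()

# Row filter: keep only rows without missing values
complete = df.dropna().copy()

# Advanced row filter: people with an even height
even_height = df[df["height"] % 2 == 0]

# Row-wise formula: BMI = weight (kg) / height (m)^2
complete["bmi"] = complete["weight"] / (complete["height"] / 100) ** 2

# Condition-based binning (quantization) with explicit thresholds
complete["bmi_class"] = pd.cut(
    complete["bmi"],
    bins=[0, 18.5, 25, 30, float("inf")],
    labels=["underweight", "normal", "overweight", "obese"],
)

# Min-max normalization of height and weight into [0, 1]
scaled = MinMaxScaler().fit_transform(complete[["height", "weight"]])
complete["height_n"] = scaled[:, 0]
complete["weight_n"] = scaled[:, 1]

# Group by + aggregation: mean BMI per gender
mean_by_gender = complete.groupby("gender")["bmi"].mean()
print(mean_by_gender)
```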

Click here to download the code from the class. For further information, I strongly suggest reading the documentation below:

Homework 2: Create a new Excel (or CSV) file for an imaginary classroom and fill it with your own unique data, with the columns studentID, name, midterm and final grades. The data file should contain at least 20 rows without missing data. Create another Excel (or CSV) file with studentID and project columns, and fill in imaginary project grades for the students. Complete the steps below:

- Create a DataFrame for each of the two files.

- Join both files into a single data frame.

- Get the names of the students with the maximum midterm, final and project grades.

- Find the average, maximum and minimum grades for the midterm, final and project.

- Normalize all grades with min-max normalization.

- Sort the data frame lexicographically by student name.

Submit your homework to the course e-mail address as a zip file containing your data files (Excel or CSV) and your Python code together.
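One possible way to complete the Homework 2 steps with pandas, sketched on two tiny hypothetical tables that stand in for your own files of 20+ rows (the column names follow the homework text). The join is an inner merge on studentID; with real files you would first load them with pd.read_csv or pd.read_excel.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Hypothetical stand-ins for the two homework files
grades = pd.DataFrame({
    "studentID": [1, 2, 3, 4],
    "name": ["Deniz", "Ece", "Baran", "Arda"],
    "midterm": [70, 85, 60, 90],
    "final": [75, 95, 65, 80],
})
projects = pd.DataFrame({"studentID": [1, 2, 3, 4], "project": [88, 70, 92, 60]})

# Join both tables on the shared key
df = grades.merge(projects, on="studentID", how="inner")

cols = ["midterm", "final", "project"]
# idxmax returns the row label of the maximum, which we use to look up the name
top_students = {c: df.loc[df[c].idxmax(), "name"] for c in cols}

# Average, maximum and minimum per assessment
summary = df[cols].agg(["mean", "max", "min"])

# Min-max normalization of all grade columns
df[cols] = MinMaxScaler().fit_transform(df[cols])

# Lexicographic sort by student name
df = df.sort_values("name").reset_index(drop=True)

print(top_students)
print(summary)
print(df)
```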

Week 3 (Oct 14): Introduction to Data Manipulation: the concept of data and the types of data: Categorical (Nominal, Ordinal) and Numerical (Interval, Ratio). Supervised / unsupervised learning and the concept of classification. Algorithm dominance (comparing rule-based learning and decision trees as an example), the KNN algorithm.

KNN Code

Some useful links covering the course content:

Homework 3: Solve your problem with the KNN algorithm, compare the outcomes of the decision tree and KNN, and comment on the success rates.
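A sketch of the Homework 3 comparison on scikit-learn's bundled Iris data, used here only as a stand-in for your own problem. The split ratio, k=5 and the scaling step are assumptions to tune, not the course's settings.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y)

# KNN is distance-based, so features are scaled; the scaler is fit on training data only
scaler = StandardScaler().fit(X_train)
knn = KNeighborsClassifier(n_neighbors=5).fit(scaler.transform(X_train), y_train)
knn_acc = knn.score(scaler.transform(X_test), y_test)

# Decision trees split on per-feature thresholds and do not need scaling
tree = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)
tree_acc = tree.score(X_test, y_test)

print(f"KNN accuracy:           {knn_acc:.3f}")
print(f"Decision tree accuracy: {tree_acc:.3f}")
```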

Week 4 (Oct 21):

Deadline for project proposals: Please prepare a couple of paragraphs introducing your term project idea, including the problem you want to solve and the data set you want to use.

Machine Learning Algorithms: SVM, KNN (review), Decision Tree (review), Naive Bayes

Week 4 code for classification algorithms (Mushroom data set from Kaggle) and evaluation

Week 4 code for the Iris data set

Concepts Covered:

- https://scikit-learn.org/stable/modules/generated/sklearn.metrics.accuracy_score.html

- https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html

- https://scikit-learn.org/stable/modules/naive_bayes.html

- https://scikit-learn.org/stable/modules/generated/sklearn.metrics.confusion_matrix.html

Homework 4: Add SVM, Naive Bayes, confusion matrix and accuracy score calculations to your previous code.
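A hedged sketch of the Homework 4 additions: an SVM (SVC) and Gaussian Naive Bayes evaluated with confusion_matrix and accuracy_score, again on Iris as a placeholder. The Mushroom data set used in class is fully categorical, so it would additionally need encoding (and possibly CategoricalNB instead of GaussianNB); that step is not shown.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

models = {
    # SVMs are sensitive to feature scale, so the SVC is wrapped with a scaler
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC(kernel="rbf", C=1.0)),
    "Gaussian Naive Bayes": GaussianNB(),
}
results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    # Rows are true classes, columns are predicted classes
    cm = confusion_matrix(y_test, y_pred)
    results[name] = (accuracy_score(y_test, y_pred), cm)
    print(name, "accuracy:", round(results[name][0], 3))
    print(cm)
```

Accuracy is the trace of the confusion matrix divided by its total, so the two outputs can be cross-checked.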

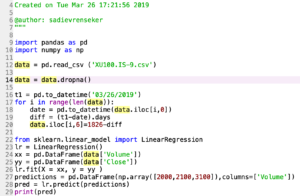

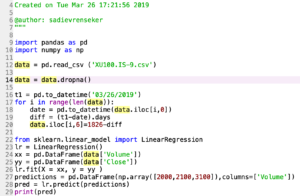

Week 5 (Oct 28): Classification Algorithms and Regression (Prediction Algorithms): concepts of classification algorithms and implementing the algorithms

Logistic Regression, Linear Regression, Multiple Linear Regression

Concepts Covered:

- https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LogisticRegression.html

- https://scikit-learn.org/stable/modules/generated/sklearn.linear_model.LinearRegression.html

- https://scikit-learn.org/stable/modules/linear_model.html

- https://scikit-learn.org/stable/modules/generated/sklearn.metrics.mean_squared_error.html

- https://scikit-learn.org/stable/modules/generated/sklearn.metrics.mean_absolute_error.html

Week 5 Codes

Homework 5: Add the Linear Regression and Logistic Regression algorithms to your previous code.
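A minimal sketch of Homework 5, assuming scikit-learn's bundled breast cancer (classification) and diabetes (regression) data sets in place of your homework data. RMSE is taken as the square root of MSE by hand rather than through a library option.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer, load_diabetes
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.metrics import accuracy_score, mean_absolute_error, mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Classification: logistic regression maps a linear score to a class probability (sigmoid)
Xc, yc = load_breast_cancer(return_X_y=True)
Xc_tr, Xc_te, yc_tr, yc_te = train_test_split(
    Xc, yc, test_size=0.25, random_state=0, stratify=yc)
logreg = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
logreg.fit(Xc_tr, yc_tr)
log_acc = accuracy_score(yc_te, logreg.predict(Xc_te))

# Regression: multiple linear regression fits y = b0 + b1*x1 + ... + bn*xn by least squares
Xr, yr = load_diabetes(return_X_y=True)
Xr_tr, Xr_te, yr_tr, yr_te = train_test_split(Xr, yr, test_size=0.25, random_state=0)
linreg = LinearRegression().fit(Xr_tr, yr_tr)
pred = linreg.predict(Xr_te)
mse = mean_squared_error(yr_te, pred)
mae = mean_absolute_error(yr_te, pred)
rmse = np.sqrt(mse)

print(f"Logistic regression accuracy: {log_acc:.3f}")
print(f"Linear regression MSE={mse:.1f}  RMSE={rmse:.1f}  MAE={mae:.1f}")
print("coefficients:", linreg.coef_.round(1))
```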

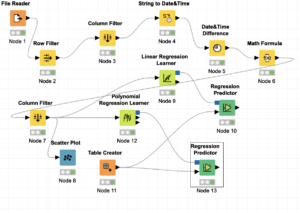

Week 6 (Nov 4): Regression Algorithms: concepts of prediction algorithms, implementing the algorithms in KNIME and coding them in Python. Algorithms covered:

- Linear Regression

- Polynomial Regression

- Support Vector Regressor

- Regression Trees and Decision Tree Regressor

Python code for the regression algorithms
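Polynomial regression can be sketched as a linear model fitted on expanded features. The quadratic data below is hypothetical, and both errors are measured on the training data only, for illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Hypothetical 1-D data with a quadratic trend plus noise
rng = np.random.default_rng(0)
x = np.linspace(-3, 3, 60).reshape(-1, 1)
y = 0.5 * x.ravel() ** 2 - x.ravel() + 2 + rng.normal(0, 0.3, 60)

# Plain linear regression: a straight line cannot follow the curve
linear = LinearRegression().fit(x, y)

# PolynomialFeatures expands x into [1, x, x^2]; the linear model is then fit on those columns
poly = make_pipeline(PolynomialFeatures(degree=2), LinearRegression()).fit(x, y)

mae_linear = mean_absolute_error(y, linear.predict(x))
mae_poly = mean_absolute_error(y, poly.predict(x))
print(f"linear MAE={mae_linear:.3f}  degree-2 polynomial MAE={mae_poly:.3f}")
```

Raising the degree further keeps lowering the training error, which is exactly why the degree should be chosen on held-out data.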

Concepts Covered:

- https://scikit-learn.org/stable/modules/tree.html

- https://scikit-learn.org/stable/modules/generated/sklearn.tree.DecisionTreeRegressor.html

- https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestRegressor.html

- https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVR.html

Week 6 Codes

Homework 6: Implement DTR (Decision Tree Regressor), RFR (Random Forest Regressor) and SVR on your homework data set. Also experiment with the algorithms' parameters and try to find the best MAE and RMSE values without overfitting.
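A sketch of Homework 6 on scikit-learn's bundled diabetes data: each regressor is scored on both the training and the test split, so overfitting shows up as a large gap between them. The hyperparameter values are illustrative starting points, not tuned results.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_absolute_error, mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

X, y = load_diabetes(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0)

models = {
    # max_depth limits tree growth; an unlimited tree memorizes the training set
    "DTR": DecisionTreeRegressor(max_depth=4, random_state=0),
    "RFR": RandomForestRegressor(n_estimators=200, max_depth=6, random_state=0),
    # SVR is sensitive to feature scale, so it is wrapped with a scaler
    "SVR": make_pipeline(StandardScaler(), SVR(kernel="rbf", C=100, epsilon=5)),
}
scores = {}
for name, model in models.items():
    model.fit(X_tr, y_tr)
    row = {}
    for split, (Xs, ys) in {"train": (X_tr, y_tr), "test": (X_te, y_te)}.items():
        pred = model.predict(Xs)
        row[f"{split}_MAE"] = mean_absolute_error(ys, pred)
        row[f"{split}_RMSE"] = np.sqrt(mean_squared_error(ys, pred))
    scores[name] = row
    # A much lower train error than test error is a sign of overfitting
    print(name, {k: round(v, 1) for k, v in row.items()})
```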

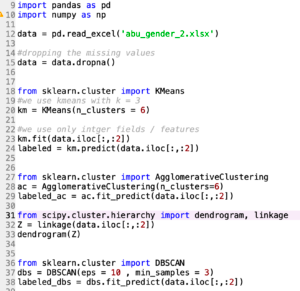

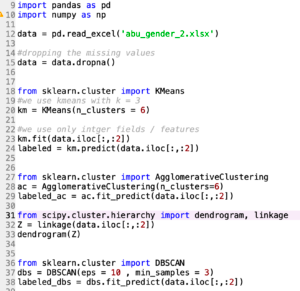

Week 7 (Nov 11): Clustering Algorithms: concepts of clustering algorithms; two types of clustering approaches: hard clustering and soft clustering; four types of clustering algorithms: centroid-based, density-based, statistical distribution-based and hierarchical. Evaluation of clustering algorithms: WCSS, silhouette and the elbow technique.

K-Means Clustering algorithm and concept of clustering.

- https://scikit-learn.org/stable/modules/clustering.html

- https://scikit-learn.org/stable/modules/generated/sklearn.cluster.KMeans.html

- https://scikit-learn.org/stable/modules/generated/sklearn.metrics.silhouette_score.html

Python code from this year

Python code from last year:

Homework 7: Implement a clustering algorithm on your homework scenario. You can apply clustering in the preprocessing phase to improve data quality, or you can use the clusters in the machine learning part.
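The WCSS / elbow and silhouette evaluation from this week can be sketched as follows, on hypothetical synthetic data with four well-separated groups (the centers are an assumption chosen so the answer is clear). scikit-learn exposes WCSS as the inertia_ attribute of a fitted KMeans model.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Hypothetical data: four well-separated groups
X, _ = make_blobs(n_samples=400, centers=[[0, 0], [8, 0], [0, 8], [8, 8]],
                  cluster_std=0.8, random_state=7)

wcss, silhouettes = {}, {}
for k in range(2, 9):
    km = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    wcss[k] = km.inertia_                      # within-cluster sum of squares
    silhouettes[k] = silhouette_score(X, km.labels_)

# Elbow technique: look for the k after which WCSS stops dropping sharply.
# Silhouette: higher is better (range -1 to 1).
best_k = max(silhouettes, key=silhouettes.get)
for k in wcss:
    print(f"k={k}  WCSS={wcss[k]:.0f}  silhouette={silhouettes[k]:.3f}")
print("best k by silhouette:", best_k)
```

On real data the elbow is often less obvious, which is why the two criteria are usually read together.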

Week 8 (Nov 18): Clustering Algorithms (cont.): DBSCAN, Hierarchical Clustering, Agglomerative Clustering and their implementations.

Homework 8: Implement the DBSCAN, Hierarchical Clustering and Agglomerative Clustering algorithms in addition to the K-Means algorithm from last week. Compare their success and execution performance.

Code from the 8th week
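A sketch of the Homework 8 comparison, assuming the synthetic two-moons data set as a stand-in for your scenario. It reports the number of clusters, the adjusted Rand index against the known labels, the silhouette and the fit time; the eps, min_samples and linkage values are illustrative. Note that silhouette_score treats DBSCAN's noise label -1 as just another cluster.

```python
import time

from sklearn.cluster import DBSCAN, AgglomerativeClustering, KMeans
from sklearn.datasets import make_moons
from sklearn.metrics import adjusted_rand_score, silhouette_score

# Hypothetical data: two interleaving half-moons, a non-convex shape K-Means handles poorly
X, y_true = make_moons(n_samples=300, noise=0.05, random_state=0)

models = {
    "K-Means": KMeans(n_clusters=2, n_init=10, random_state=0),
    # eps and min_samples are illustrative values and must be tuned for real data
    "DBSCAN": DBSCAN(eps=0.2, min_samples=5),
    # Single linkage chains nearby points, which suits elongated clusters
    "Agglomerative (single)": AgglomerativeClustering(n_clusters=2, linkage="single"),
}
results = {}
for name, model in models.items():
    start = time.perf_counter()
    labels = model.fit_predict(X)
    elapsed = time.perf_counter() - start
    n_clusters = len(set(labels) - {-1})  # DBSCAN marks noise points with -1
    sil = silhouette_score(X, labels) if n_clusters > 1 else float("nan")
    results[name] = {
        "clusters": n_clusters,
        "ARI": adjusted_rand_score(y_true, labels),
        "silhouette": sil,
        "seconds": elapsed,
    }
    print(name, results[name])
```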

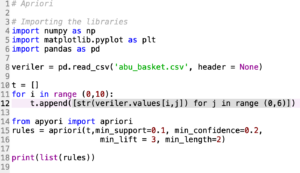

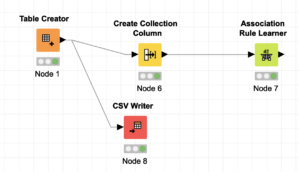

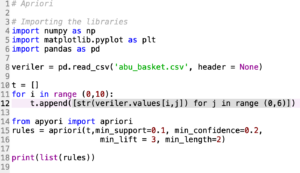

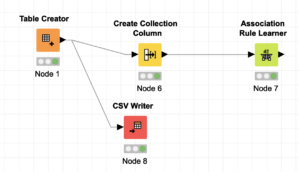

Week 9 (Nov 25): Association Rule Mining: concepts of association rule mining (ARM) and association rule learning (ARL) algorithms, implementing the algorithms in KNIME and coding them in Python. Algorithm covered: A-Priori.

Click here to download the apyori library for the Python code

Click for Python code

Click for KNIME workflow

Homework: Link for Kaggle, Instacart

Homework 9: Implement association rule mining with A-Priori, FP-Growth or Eclat and compare their success and execution times (deadline postponed to 9 December 2022; update: create your own unique data set).
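A compact, from-scratch sketch of the A-Priori algorithm (frequent itemsets plus rule generation with confidence and lift), written without the apyori library. The basket data is hypothetical, FP-Growth and Eclat are not shown, and the code favors clarity over speed.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for all itemsets with support >= min_support."""
    n = len(transactions)
    sets = [frozenset(t) for t in transactions]
    candidates = {frozenset([item]) for t in sets for item in t}  # level-1 candidates
    frequent, k = {}, 1
    while candidates:
        # Support = fraction of transactions containing the candidate
        counts = {c: sum(1 for t in sets if c <= t) for c in candidates}
        level = {c: cnt / n for c, cnt in counts.items() if cnt / n >= min_support}
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-itemsets
        keys = list(level)
        candidates = {a | b for a in keys for b in keys if len(a | b) == k}
        # Prune step (A-Priori property): every (k-1)-subset must itself be frequent
        candidates = {c for c in candidates
                      if all(frozenset(s) in level for s in combinations(c, k - 1))}
    return frequent


def rules(frequent, min_confidence):
    """Return (antecedent, consequent, confidence, lift) tuples from frequent itemsets."""
    out = []
    for itemset, support in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in map(frozenset, combinations(itemset, r)):
                rhs = itemset - lhs
                confidence = support / frequent[lhs]
                if confidence >= min_confidence:
                    lift = confidence / frequent[rhs]
                    out.append((set(lhs), set(rhs), confidence, lift))
    return out


# Hypothetical basket data (create your own unique data set for the homework)
baskets = [
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["bread", "butter"],
    ["milk", "eggs"],
    ["bread", "butter", "milk", "eggs"],
]
freq = apriori(baskets, min_support=0.4)
for lhs, rhs, conf, lift in rules(freq, min_confidence=0.7):
    print(f"{lhs} -> {rhs}  confidence={conf:.2f}  lift={lift:.2f}")
```

Because every subset of a frequent itemset is also frequent, the supports of both sides of each rule are already in the dictionary, so rule generation needs no extra pass over the data.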


Week 10 (Dec 2): Concept of Error and Evaluation Techniques

- Validation techniques: n-fold cross-validation, LOO (leave-one-out), split validation

- Regression: RMSE, MAE, R2

- Clustering algorithms: Rand index, silhouette, WCSS

- Classification algorithms: accuracy, recall, precision, F-score, F1-score, etc.
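The formulas behind several of the metrics listed above, sketched in plain Python so each one is visible: precision = TP/(TP+FP), recall (sensitivity) = TP/(TP+FN), specificity = TN/(TN+FP), F1 as the harmonic mean of precision and recall, and MSE, RMSE, MAE and R2 for regression. The inputs in the usage lines are made up for illustration.

```python
import math


def classification_metrics(y_true, y_pred, positive=1):
    """Binary classification metrics computed from the confusion-matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0            # also called sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "f1": f1}


def regression_metrics(y_true, y_pred):
    """MSE, RMSE, MAE and R2 (1 - residual sum of squares / total sum of squares)."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    mean_t = sum(y_true) / len(y_true)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    r2 = 1 - sum(e * e for e in errors) / ss_tot
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "R2": r2}


cls = classification_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 0, 1, 1, 0])
reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 7.5, 10.0])
print(cls)
print(reg)
```

For these classification inputs TP = TN = 3 and FP = FN = 1, so all five classification metrics come out to 0.75.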

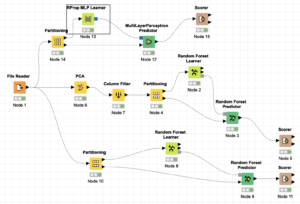

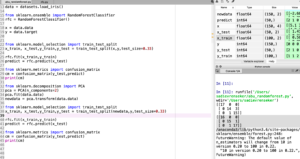

We also had an introduction to dimensionality reduction with PCA (principal component analysis).

Python codes for the Cross Validation

Homework 10: Re-implement all the classification and prediction code from your homework and try to increase the success rates using preprocessing techniques. Also discuss the evaluation techniques while comparing the preprocessing techniques.
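The three validation schemes from this week (split, n-fold and leave-one-out) sketched with scikit-learn on the bundled Iris data; the KNN model and the 10-fold setting are assumptions. Shuffling matters here because Iris is stored sorted by class.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import (KFold, LeaveOneOut, cross_val_score,
                                     train_test_split)
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
model = KNeighborsClassifier(n_neighbors=5)

# Split (hold-out) validation: a single train/test split
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0, stratify=y)
split_acc = model.fit(X_tr, y_tr).score(X_te, y_te)

# n-fold cross-validation: every sample is used for testing exactly once
kfold_scores = cross_val_score(
    model, X, y, cv=KFold(n_splits=10, shuffle=True, random_state=0))

# Leave-one-out: n folds with a single test sample each (expensive for large data)
loo_scores = cross_val_score(model, X, y, cv=LeaveOneOut())

print(f"split:   {split_acc:.3f}")
print(f"10-fold: {kfold_scores.mean():.3f} +/- {kfold_scores.std():.3f}")
print(f"LOO:     {loo_scores.mean():.3f} over {len(loo_scores)} folds")
```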


Week 11 (Dec 9): End-to-End Practice with Data Preprocessing: We picked a random data set from Kaggle and solved the problem with new concepts such as PCA, normalization and encoding techniques. The concepts covered are listed below:

- https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.PCA.html

- https://imbalanced-learn.org/stable/references/generated/imblearn.over_sampling.SMOTE.html

- https://scikit-learn.org/stable/modules/generated/sklearn.discriminant_analysis.LinearDiscriminantAnalysis.html

- https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.OneHotEncoder.html

- https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.LabelEncoder.html

Codes from the class

Homework 11: Re-implement your previous submissions with preprocessing steps, checking for suitable encoding, balancing, scaling and dimensionality reduction techniques.
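One way to wire the encoding, scaling and dimensionality reduction steps of Homework 11 into a single scikit-learn Pipeline, sketched on a small hypothetical table. SMOTE balancing from imbalanced-learn is left out to keep the sketch to scikit-learn alone, and LinearDiscriminantAnalysis could replace the PCA step. Setting sparse_threshold=0 forces dense output so that PCA accepts it.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import LabelEncoder, OneHotEncoder, StandardScaler

# Hypothetical data: two numeric columns, one categorical column and a text label
rng = np.random.default_rng(0)
n = 200
df = pd.DataFrame({
    "age": rng.integers(18, 70, n),
    "income": rng.normal(50_000, 15_000, n),
    "city": rng.choice(["Antalya", "Istanbul", "Ankara"], n),
})
df["label"] = np.where(df["income"] > 50_000, "high", "low")

# LabelEncoder sorts class names, so "high" -> 0 and "low" -> 1
encoder = LabelEncoder()
y = encoder.fit_transform(df["label"])
X = df.drop(columns="label")

preprocess = ColumnTransformer(
    [("num", StandardScaler(), ["age", "income"]),                  # scaling
     ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"])],    # one-hot encoding
    sparse_threshold=0,  # always return a dense array
)
model = Pipeline([
    ("prep", preprocess),
    ("pca", PCA(n_components=3)),   # 5 encoded columns reduced to 3 components
    ("clf", LogisticRegression()),
])

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)
model.fit(X_tr, y_tr)
acc = model.score(X_te, y_te)
print("test accuracy:", round(acc, 3))
```

Fitting every step inside one Pipeline means the scaler, encoder and PCA learn only from the training split, which avoids leaking test information into preprocessing.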

Week 12 (Dec 16): Collective Learning, Consensus Learning and Clustering Algorithms: Ensemble Learning, Bagging, Boosting Techniques, Random Forest, GBM, XGBoost, LightGBM. Some useful links for the class:

Readings and resources:

Python code from the class: Gradient Boosting:

XGBoost (to run the code, install XGBoost from the command prompt: conda install -c conda-forge xgboost)

Install the XGBoost extension for KNIME

Homework 12: Re-implement every classification and regression code with ensemble learning techniques. Compare the success rates and execution speeds in a table (put all the algorithms you have implemented so far in the rows and the evaluation metrics in the columns, and compare the outcomes).
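A sketch of the Homework 12 comparison table (algorithms as rows, metrics as columns) using scikit-learn's built-in ensembles on the bundled breast cancer data. XGBoost and LightGBM are separate packages; if installed, their scikit-learn-style classifiers could be added as extra rows, but that is not shown here.

```python
import time

import pandas as pd
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import (AdaBoostClassifier, BaggingClassifier,
                              GradientBoostingClassifier, RandomForestClassifier)
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)

models = {
    "Decision Tree (baseline)": DecisionTreeClassifier(random_state=0),
    "Bagging": BaggingClassifier(n_estimators=50, random_state=0),   # bagged decision trees
    "Random Forest": RandomForestClassifier(n_estimators=200, random_state=0),
    "AdaBoost": AdaBoostClassifier(n_estimators=100, random_state=0),
    "Gradient Boosting": GradientBoostingClassifier(random_state=0),
}
rows = []
for name, model in models.items():
    start = time.perf_counter()
    model.fit(X_tr, y_tr)
    fit_seconds = time.perf_counter() - start
    pred = model.predict(X_te)
    rows.append({"algorithm": name,
                 "accuracy": accuracy_score(y_te, pred),
                 "f1": f1_score(y_te, pred),
                 "fit_seconds": fit_seconds})

# Algorithms as rows, metrics as columns
table = pd.DataFrame(rows).set_index("algorithm")
print(table.round(3))
```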

Week 12 (Dec 16): Deep Learning:

Homework 12: Re-implement every classification and regression code with deep learning techniques. Compare the success rates and execution speeds in a table (put all the algorithms you have implemented so far in the rows and the evaluation metrics in the columns, and compare the outcomes). Also add the positive or negative effects of the preprocessing steps and parameters to the table and discuss the outcomes.
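As a hedged stand-in for the deep learning homework, the sketch below trains scikit-learn's MLPClassifier, a small feed-forward neural network, and records accuracy and fit time so it can be added as a row to the comparison table. A deeper model would normally use a framework such as Keras or PyTorch, which is not assumed here; the layer sizes are illustrative.

```python
import time

from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.25, random_state=0, stratify=y)

# Two hidden layers; neural networks need scaled inputs to train reliably.
# early_stopping holds out 10% of the training data to stop before overfitting.
mlp = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(64, 32), max_iter=1000,
                  early_stopping=True, random_state=0),
)
start = time.perf_counter()
mlp.fit(X_tr, y_tr)
fit_seconds = time.perf_counter() - start
mlp_acc = accuracy_score(y_te, mlp.predict(X_te))
print(f"MLP accuracy={mlp_acc:.3f}  fit time={fit_seconds:.2f}s")
```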

Week 13 (Dec 23):

Project Presentations: Presentations will be picked randomly during the class, and anybody absent will be considered as not having presented. Project Deliveries (until Dec 22): project presentation, project report (explaining your project, your approach and methodologies, the difficulties you faced, the solutions you found, the results you achieved, and links to your data sources) and Python code (in .py format). Please put all these files in a single .zip or .rar archive containing no more than 4 files.

Late submissions will not be accepted!

Week 14 (May 12): TBA

Week 15 (May 19): TBA
